Fundamentals of Image Processing for Terrain Relative Navigation (TRN)

Discipline: Guidance, Navigation and Control (SLaMS Webcast Series)

Presented via Webcast on April 23, 2014

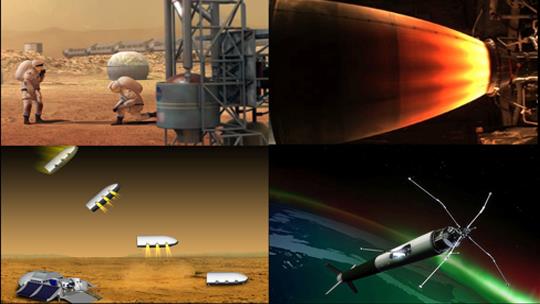

Current robotic planetary landers do not identify landmarks for navigation or detect landing hazards for safe landing. Typically they just measure altitude and velocity and land in a relatively large landing ellipse that is free from hazards. Since the best science is usually located near terrain relief that exposes material of different ages, this “blind” landing approach limits science return. For example, the Mars Science Laboratory Curiosity rover landed within a landing ellipse tens of kilometers wide in a flat region of Gale crater. Curiosity is currently driving to Mount Sharp, which is the primary science destination.

There are two complementary technologies being developed to enable access to more extreme terrain during landing: Terrain Relative Navigation (TRN) for accurate position estimation and Hazard Detection for avoiding small, unknown hazards. During TRN the lander automatically recognizes landmarks and computes a map-relative position, which can be used in two different ways. First, if there is enough fuel, the lander is guided to a pin-point landing (within 100 m of the target). Second, if the vehicle is limited on fuel, the landing ellipse is populated with safe landing sites and the lander is guided to the safest reachable site. This multi-point safe landing strategy enables selection of landing ellipses with large distributed hazards. Both applications improve science return by placing the lander closer to terrain relief.

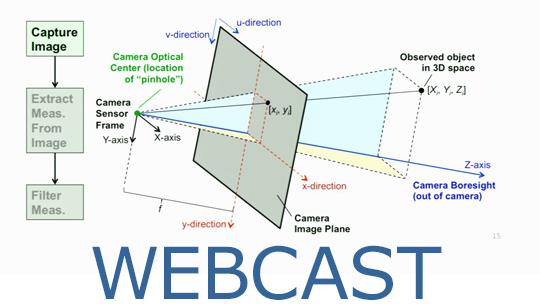

This presentation will describe the image processing building blocks for development of a TRN system for planetary landing, including image warping, feature selection, and image correlation. It will then describe the Lander Vision System (LVS), which integrates these components into a real-time image-based terrain relative navigation system, and show results from a recent field test of the LVS. The presentation will conclude by showing how the same image processing techniques can be applied to lidar data to enable TRN under any lighting conditions.
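
To give a feel for the image correlation building block, here is a minimal sketch of landmark matching by normalized cross-correlation (NCC), written in plain Python. It is an illustration only and is not taken from the LVS: the function names `ncc` and `locate_template`, the synthetic map, and the exhaustive search are all assumptions made for this example. It also assumes the descent image has already been warped into the map frame, so that only a translation remains to be found.

```python
import math
import random


def ncc(patch, template):
    """Normalized cross-correlation of two equal-size 2D lists, in [-1, 1]."""
    a = [v for row in patch for v in row]
    b = [v for row in template for v in row]
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [v - mean_a for v in a]
    db = [v - mean_b for v in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0.0:
        return 0.0  # flat patch: correlation is undefined, treat as no match
    return sum(x * y for x, y in zip(da, db)) / denom


def locate_template(image, template):
    """Slide the template over the image; return (row, col, score) of the best match."""
    th, tw = len(template), len(template[0])
    ih, iw = len(image), len(image[0])
    best = (0, 0, -2.0)  # any real score is >= -1, so this is always replaced
    for r in range(ih - th + 1):
        for c in range(iw - tw + 1):
            patch = [row[c:c + tw] for row in image[r:r + th]]
            score = ncc(patch, template)
            if score > best[2]:
                best = (r, c, score)
    return best


# Hypothetical data: a random 16x16 "map" and a 5x5 landmark cut from it at
# row 4, column 5, with a gain and offset applied to mimic a lighting change.
rng = random.Random(0)
map_image = [[float(rng.randint(0, 255)) for _ in range(16)] for _ in range(16)]
landmark = [[2.0 * v + 10.0 for v in row[5:10]] for row in map_image[4:9]]

row, col, score = locate_template(map_image, landmark)
print(row, col, round(score, 6))
```

Because NCC subtracts the mean and divides by the contrast of each patch, the brightness gain and offset applied to the landmark do not affect the score, so the search should recover the original location with a score close to 1. A flight system would need something much faster than this exhaustive scan, and would correlate only around selected high-contrast features rather than everywhere.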